Based on CentOS 7.5 (1804)

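Configure the yum repositories

Configure the EPEL and Ceph yum repositories on all control and compute nodes (the base repository has already been updated). The ceph205 node is used as the example.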

EPEL: http://mirrors.aliyun.com/repo/

    [root@ceph205 ~]# wget -O /etc/yum.repos.d/epel-7.repo http://mirrors.aliyun.com/repo/epel-7.repo

Ceph: http://mirrors.aliyun.com/ceph/

The repository definition (a .repo file under /etc/yum.repos.d/):

    [ceph]
    name=ceph
    baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/x86_64/
    enabled=1
    gpgcheck=1
    type=rpm-md
    gpgkey=http://mirrors.aliyun.com/ceph/keys/release.asc

    [ceph-noarch]
    name=cephnoarch
    baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/noarch/
    enabled=1
    gpgcheck=1
    type=rpm-md
    gpgkey=http://mirrors.aliyun.com/ceph/keys/release.asc

    [ceph-source]
    name=ceph-source
    baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/SRPMS/
    enabled=1
    gpgcheck=1
    type=rpm-md
    gpgkey=http://mirrors.aliyun.com/ceph/keys/release.asc
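Since the three repository sections differ only in their name and architecture path, the file can also be generated rather than typed by hand. The following is a minimal sketch, not part of the original procedure: `render_ceph_repo` is a hypothetical helper, the target file name `ceph.repo` is an assumption, and the helper uses the section name as the `name=` value.

```shell
# Sketch: print a Ceph yum repo definition for a given release and mirror.
# render_ceph_repo is a hypothetical helper; its defaults match the values
# used in this walkthrough (luminous, mirrors.aliyun.com).
render_ceph_repo() {
    local release="${1:-luminous}"
    local mirror="${2:-http://mirrors.aliyun.com/ceph}"
    local entry section path
    # Each entry is "section-name:architecture-path".
    for entry in ceph:x86_64 ceph-noarch:noarch ceph-source:SRPMS; do
        section="${entry%%:*}"
        path="${entry#*:}"
        printf '[%s]\n' "$section"
        printf 'name=%s\n' "$section"
        printf 'baseurl=%s/rpm-%s/el7/%s/\n' "$mirror" "$release" "$path"
        printf 'enabled=1\ngpgcheck=1\ntype=rpm-md\n'
        printf 'gpgkey=%s/keys/release.asc\n\n' "$mirror"
    done
}

# Preview on stdout. As root, the output could be redirected to a file such
# as /etc/yum.repos.d/ceph.repo (file name assumed, not given above).
render_ceph_repo luminous
```

Generating the file this way keeps the release name in one place, which may help if the same nodes are later pointed at a different release directory on the mirror.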

Set the hostnames

Set the corresponding hostname on every node and list all nodes in /etc/hosts. The ceph205 node is used as the example.

    [root@ceph205 ~]# cat /etc/hosts
    127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.1.201 controller
    192.168.1.202 compute202
    192.168.1.205 ceph205
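Because the same host entries have to exist on every node, it can help to add them idempotently instead of editing each file by hand. This is a small sketch under that assumption; `ensure_host_entry` is a hypothetical helper, and the demonstration writes to a temporary file rather than the real /etc/hosts.

```shell
# ensure_host_entry FILE IP NAME
# Append "IP NAME" to FILE unless NAME already appears as a hostname field
# on a non-comment line. Hypothetical helper for illustration only.
ensure_host_entry() {
    local file="$1" ip="$2" name="$3"
    if ! awk -v n="$name" '
            !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Demonstration on a scratch copy, using the addresses from this walkthrough.
hosts_file="$(mktemp)"
printf '127.0.0.1 localhost\n' > "$hosts_file"
ensure_host_entry "$hosts_file" 192.168.1.201 controller
ensure_host_entry "$hosts_file" 192.168.1.202 compute202
ensure_host_entry "$hosts_file" 192.168.1.205 ceph205
ensure_host_entry "$hosts_file" 192.168.1.205 ceph205   # second call is a no-op
cat "$hosts_file"
rm -f "$hosts_file"
```

On a real node the first argument would be /etc/hosts and the script would need root privileges.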

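Configure NTP

Keep the clocks on all nodes synchronized, with ceph205 configured as the time server. The chrony settings shown here (in /etc/chrony.conf) name controller as the upstream server and allow clients from the 192.168.1.0/24 subnet:

    server controller iburst
    allow 192.168.1.0/24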

Restart the service on all nodes, then check the synchronization status:

    systemctl restart chronyd.service
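One way to check the synchronization status is to look at the output of `chronyc sources`, where the currently selected source is marked with `^*` at the start of its line. The sketch below only parses text passed on stdin; `has_synced_source` is a hypothetical helper, and the sample line is illustrative rather than captured from this cluster.

```shell
# has_synced_source: read `chronyc sources` output on stdin and succeed if
# some line starts with "^*", the marker for the selected (synced) source.
has_synced_source() {
    grep -q '^\^\*'
}

# Illustrative sample only; real values will differ.
sample='MS Name/IP address         Stratum Poll Reach LastRx Last sample
^* controller                    3   6   377    25   +12us[ +15us] +/-   30ms'

if printf '%s\n' "$sample" | has_synced_source; then
    echo "synchronized"
else
    echo "not synchronized"
fi
```

On a node running chronyd, the same check would be `chronyc sources | has_synced_source`.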

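Disable the firewall and SELinux

    [root@ceph205 ~]# systemctl stop firewalld
    [root@ceph205 ~]# systemctl disable firewalld
    [root@ceph205 ~]# setenforce 0

Note that setenforce 0 only lasts until the next reboot; to make the change permanent, also adjust the SELINUX setting in /etc/selinux/config.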

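Create the user

Run on all nodes: create the cephde user and set its password (123456 in this walkthrough), for example with useradd and passwd.

    New password: 123456
    Retype new password: 123456

Then edit the sudoers file with visudo so that cephde is in the sudo list. Below line 92, "root ALL=(ALL) ALL", add a new line "cephde ALL=(ALL) ALL":

    [root@ceph205 ~]# visudo
    cephde  ALL=(ALL)       ALL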

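Grant privileges to the user

Give the cephde user passwordless sudo (root) privileges. As cephde:

    [cephde@ceph205 ~]$ echo "cephde ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/cephde
    [sudo] password for cephde: 123456
    [cephde@ceph205 ~]$ sudo chmod 0440 /etc/sudoers.d/cephde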
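Since this step is repeated on every node, the two commands can be wrapped in a small function. The following is a sketch only: `write_sudoers_dropin` is a hypothetical helper, and the demonstration writes into a temporary directory instead of /etc/sudoers.d so that it can be tried without root.

```shell
# write_sudoers_dropin USER [DIR]
# Write a passwordless-sudo rule for USER into DIR/USER with mode 0440.
# Hypothetical helper; DIR defaults to /etc/sudoers.d (requires root there).
write_sudoers_dropin() {
    local user="$1" dir="${2:-/etc/sudoers.d}"
    local file="${dir}/${user}"
    printf '%s ALL = (root) NOPASSWD:ALL\n' "$user" > "$file"
    chmod 0440 "$file"
}

# Demonstration in a scratch directory.
demo_dir="$(mktemp -d)"
write_sudoers_dropin cephde "$demo_dir"
cat "${demo_dir}/cephde"
rm -rf "$demo_dir"
```

On a real node it is worth checking the resulting file with `visudo -cf /etc/sudoers.d/cephde` before logging out, since a syntax error in a sudoers file can lock sudo entirely.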

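Set up passwordless SSH login

ceph-deploy does not support password prompts, so generate an SSH key pair on the admin (control) node and distribute the public key to every Ceph node. Switch to the cephde user, run ssh-keygen, and accept the defaults; the key is generated for cephde@ceph205 and ssh-keygen prints its randomart image:

    [cephde@ceph205 ~]$ ssh-keygen -t rsa
    | .. +...=o. . |
    | .oo+.=oo o . . |
    | +o.o=o. . o o |
    | . oo.=oo . + E |
    | ...o.S . . + |
    | . =o . o |
    | o ++. . |
    | . ..o+ . |
    | .ooo.. |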

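Distribute the key

This assumes the cephde user has already been created on every control and storage node.

    [cephde@ceph205 ~]$ ssh-copy-id cephde@ceph206
    Are you sure you want to continue connecting (yes/no)? yes
    cephde@ceph206's password: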
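With more than a couple of nodes, the key distribution can be looped. The sketch below assumes the node names are passed as arguments; `distribute_key` and the `DRY_RUN` switch are hypothetical, and by default the function only prints the commands it would run.

```shell
# distribute_key USER NODE...
# Copy the current user's public key to USER@NODE for each node.
# With DRY_RUN=1 (the default here) the commands are only printed.
distribute_key() {
    local user="$1"
    shift
    local node
    for node in "$@"; do
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "ssh-copy-id ${user}@${node}"
        else
            ssh-copy-id "${user}@${node}"
        fi
    done
}

# Preview for the node used in the example above.
distribute_key cephde ceph206
```

Running it as `DRY_RUN=0 distribute_key cephde ceph206` would perform the real copy and prompt for the password once per node.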

Install ceph-deploy

Install the ceph-deploy tool on the planned admin node. In this walkthrough only ceph205 is planned as the admin node.

Create the Ceph cluster

Work as the cephde account, and do not run these commands with sudo.

    [cephde@ceph205 ~]$ mkdir cephcluster

All subsequent ceph-deploy commands are run from the directory just created.

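    [cephde@ceph205 ~]$ cd ~/cephcluster/
    [cephde@ceph205 cephcluster]$ ceph-deploy new ceph205

If the command fails with the following traceback:

    [cephde@ceph205 cephcluster]$ ceph-deploy new ceph205
    Traceback (most recent call last):
      File "/bin/ceph-deploy", line 18, in <module>
        from ceph_deploy.cli import main
      File "/usr/lib/python2.7/site-packages/ceph_deploy/cli.py", line 1, in <module>
        import pkg_resources
    ImportError: No module named pkg_resources

Cause: the python-setuptools package, which provides pkg_resources, is missing. Install it (for example with yum install -y python-setuptools) and run ceph-deploy new ceph205 again. The log of a successful run: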

    [ceph_deploy.conf][DEBUG ] found configuration file at: /home/ceph/.cephdeploy.conf
    [INFO ] Invoked (2.0.1): /bin/ceph-deploy new ceph205
    [INFO ] ceph-deploy options:
    [INFO ] username : None
    [INFO ] func : <function new at 0x7f9eeac24d70>
    [INFO ] verbose : False
    [INFO ] overwrite_conf : False
    [INFO ] quiet : False
    [INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f9eea39e3b0>
    [INFO ] cluster : ceph
    [INFO ] ssh_copykey : True
    [INFO ] mon : ['ceph205']
    [INFO ] public_network : None
    [INFO ] ceph_conf : None
    [INFO ] cluster_network : None
    [INFO ] default_release : False
    [INFO ] fsid : None
    [DEBUG ] Creating new cluster named ceph
    [INFO ] making sure passwordless SSH succeeds
    [DEBUG ] connection detected need for sudo
    [DEBUG ] connected to host: ceph205
    [DEBUG ] detect platform information from remote host
    [DEBUG ] detect machine type
    [DEBUG ] find the location of an executable
    [INFO ] Running command: sudo /usr/sbin/ip link show
    [INFO ] Running command: sudo /usr/sbin/ip addr show
    [DEBUG ] IP addresses found: [u'192.168.1.205']
    [DEBUG ] Resolving host ceph205
    [DEBUG ] Monitor ceph205 at 192.168.1.205
    [DEBUG ] Monitor initial members are ['ceph205']
    [DEBUG ] Monitor addrs are ['192.168.1.205']
    [DEBUG ] Creating a random mon key...
    [DEBUG ] Writing monitor keyring to ceph.mon.ke

Modify the cluster configuration file (optional)

Creating the cluster generates three files in the cluster directory; ceph.conf is the configuration file.

    [cephde@ceph205 cephcluster]$ cat ceph.conf
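The exact settings changed at this step are not shown above. The `ceph -s` output later in this walkthrough reports `osd_pool_default_size 2`, which suggests that option was set here, so the sketch below uses it as the example. `set_ceph_option` is a hypothetical helper that adds or updates a `key = value` line; it appends at the end of the file, which lands in `[global]` only while that is the sole section (as in a freshly generated ceph.conf).

```shell
# set_ceph_option FILE KEY VALUE
# Replace an existing "KEY = ..." line in FILE, or append one if absent.
# Hypothetical helper; assumes [global] is the only section in FILE.
set_ceph_option() {
    local file="$1" key="$2" value="$3" tmp
    tmp="$(mktemp)"
    awk -v k="$key" -v v="$value" '
        {
            # Split on the first "=" and compare the trimmed left side.
            pos = index($0, "=")
            name = (pos > 0) ? substr($0, 1, pos - 1) : ""
            gsub(/^[ \t]+|[ \t]+$/, "", name)
            if (pos > 0 && name == k) { print k " = " v; replaced = 1 }
            else print
        }
        END { if (!replaced) print k " = " v }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Demonstration on a scratch file; the fsid is the one from this cluster.
conf="$(mktemp)"
printf '[global]\nfsid = b6904020-ab52-497b-9078-c130310853bb\n' > "$conf"
set_ceph_option "$conf" osd_pool_default_size 2
cat "$conf"
rm -f "$conf"
```

In the cluster directory this would be called as `set_ceph_option ceph.conf osd_pool_default_size 2` before the monitor is initialized.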

Install Ceph

Install Ceph on all admin and storage nodes.

    [root@ceph205 ~]# yum install -y ceph ceph-radosgw

Initialize ceph_mon

Initialize the monitor from the admin node.

    [cephde@ceph205 cephcluster]$ ceph-deploy mon create-initial

    [ceph_deploy.conf][DEBUG ] found configuration file at: /home/ceph/.cephdeploy.conf
    [INFO ] Invoked (2.0.1): /bin/ceph-deploy mon create-initial
    [INFO ] ceph-deploy options:
    [INFO ] username : None
    [INFO ] verbose : False
    [INFO ] overwrite_conf : False
    [INFO ] subcommand : create-initial
    [INFO ] quiet : False
    [INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f5de121ed40>
    [INFO ] cluster : ceph
    [INFO ] func : <function mon at 0x7f5de1275398>
    [INFO ] ceph_conf : None
    [INFO ] default_release : False
    [INFO ] keyrings : None
    [DEBUG ] Deploying mon, cluster ceph hosts ceph205
    [DEBUG ] detecting platform for host ceph205 ...
    [DEBUG ] connection detected need for sudo
    [DEBUG ] connected to host: ceph205
    [DEBUG ] detect platform information from remote host
    [DEBUG ] detect machine type
    [DEBUG ] find the location of an executable
    [INFO ] distro info: CentOS Linux 7.5.1804 Core
    [DEBUG ] determining if provided host has same hostname in remote
    [DEBUG ] get remote short hostname
    [DEBUG ] deploying mon to ceph205
    [DEBUG ] get remote short hostname
    [DEBUG ] remote hostname: ceph205
    [DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [DEBUG ] create the mon path if it does not exist
    [DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-ceph205/done
    [DEBUG ] done path does not exist: /var/lib/ceph/mon/ceph-ceph205/done
    [INFO ] creating keyring file: /var/lib/ceph/tmp/ceph-ceph205.mon.keyring
    [DEBUG ] create the monitor keyring file
    [INFO ] Running command: sudo ceph-mon --cluster ceph --mkfs -i ceph205 --keyring /var/lib/ceph/tmp/ceph-ceph205.mon.keyring --setuser 167 --setgroup 167
    [INFO ] unlinking keyring file /var/lib/ceph/tmp/ceph-ceph205.mon.keyring
    [DEBUG ] create a done file to avoid re-doing the mon deployment
    [DEBUG ] create the init path if it does not exist
    [INFO ] Running command: sudo systemctl enable ceph.target
    [INFO ] Running command: sudo systemctl enable ceph-mon@ceph205
    from /etc/systemd/system/ceph-mon.target.wants/ceph-mon@ceph205.service to /usr/lib/systemd/system/ceph-mon@.service.
    [INFO ] Running command: sudo systemctl start ceph-mon@ceph205
    [INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph205.asok mon_status
    [DEBUG ] ********************************************************************************
    [DEBUG ] status for monitor: mon.ceph205
    [DEBUG ] {
    [DEBUG ]   "election_epoch": 3,
    [DEBUG ]   "extra_probe_peers": [],
    [DEBUG ]   "feature_map": {
    [DEBUG ]     "mon": {
    [DEBUG ]       "group": {
    [DEBUG ]         "features": "0x3ffddff8eeacfffb",
    [DEBUG ]         "num": 1,
    [DEBUG ]         "release": "luminous"
    [DEBUG ]       }
    [DEBUG ]     }
    [DEBUG ]   },
    [DEBUG ]   "features": {
    [DEBUG ]     "quorum_con": "4611087853746454523",
    [DEBUG ]     "quorum_mon": [
    [DEBUG ]       "kraken",
    [DEBUG ]       "luminous"
    [DEBUG ]     ],
    [DEBUG ]     "required_con": "153140804152475648",
    [DEBUG ]     "required_mon": [
    [DEBUG ]       "kraken",
    [DEBUG ]       "luminous"
    [DEBUG ]     ]
    [DEBUG ]   },
    [DEBUG ]   "monmap": {
    [DEBUG ]     "created": "2022-03-13 21:57:15.211494",
    [DEBUG ]     "epoch": 1,
    [DEBUG ]     "features": {
    [DEBUG ]       "optional": [],
    [DEBUG ]       "persistent": [
    [DEBUG ]         "kraken",
    [DEBUG ]         "luminous"
    [DEBUG ]       ]
    [DEBUG ]     },
    [DEBUG ]     "fsid": "b6904020-ab52-497b-9078-c130310853bb",
    [DEBUG ]     "modified": "2022-03-13 21:57:15.211494",
    [DEBUG ]     "mons": [
    [DEBUG ]       {
    [DEBUG ]         "addr": "192.168.1.205:6789/0",
    [DEBUG ]         "name": "ceph205",
    [DEBUG ]         "public_addr": "192.168.1.205:6789/0",
    [DEBUG ]         "rank": 0
    [DEBUG ]       }
    [DEBUG ]     ]
    [DEBUG ]   },
    [DEBUG ]   "name": "ceph205",
    [DEBUG ]   "outside_quorum": [],
    [DEBUG ]   "quorum": [
    [DEBUG ]     0
    [DEBUG ]   ],
    [DEBUG ]   "rank": 0,
    [DEBUG ]   "state": "leader",
    [DEBUG ]   "sync_provider": []
    [DEBUG ] }
    [DEBUG ] ********************************************************************************
    [INFO ] monitor: mon.ceph205 is running
    [INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph205.asok mon_status
    [INFO ] processing monitor mon.ceph205
    [DEBUG ] connection detected need for sudo
    [DEBUG ] connected to host: ceph205
    [DEBUG ] detect platform information from remote host
    [DEBUG ] detect machine type
    [DEBUG ] find the location of an executable
    [INFO ] Running command: sudo ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.ceph205.asok mon_status
    [INFO ] mon.ceph205 monitor has reached quorum!
    [INFO ] all initial monitors are running and have formed quorum
    [INFO ] Running gatherkeys...
    [INFO ] Storing keys in temp directory /tmp/tmpJsXF9o
    [DEBUG ] connection detected need for sudo
    [DEBUG ] connected to host: ceph205
    [DEBUG ] detect platform information from remote host
    [DEBUG ] detect machine type
    [DEBUG ] get remote short hostname
    [DEBUG ] fetch remote file
    [INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --admin-daemon=/var/run/ceph/ceph-mon.ceph205.asok mon_status
    [INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph205/keyring auth get client.admin
    [INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph205/keyring auth get-or-create client.admin osd allow * mds allow * mon allow * mgr allow *
    [INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph205/keyring auth get client.bootstrap-mds
    [INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph205/keyring auth get-or-create client.bootstrap-mds mon allow profile bootstrap-mds
    [INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph205/keyring auth get client.bootstrap-mgr
    [INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph205/keyring auth get-or-create client.bootstrap-mgr mon allow profile bootstrap-mgr
    [INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph205/keyring auth get client.bootstrap-osd
    [INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph205/keyring auth get-or-create client.bootstrap-osd mon allow profile bootstrap-osd
    [INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph205/keyring auth get client.bootstrap-rgw
    [INFO ] Running command: sudo /usr/bin/ceph --connect-timeout=25 --cluster=ceph --name mon. --keyring=/var/lib/ceph/mon/ceph-ceph205/keyring auth get-or-create client.bootstrap-rgw mon allow profile bootstrap-rgw
    [INFO ] Storing ceph.client.admin.keyring
    [INFO ] Storing ceph.bootstrap-mds.keyring
    [INFO ] Storing ceph.bootstrap-mgr.keyring
    [INFO ] keyring 'ceph.mon.keyring' already exists
    [INFO ] Storing ceph.bootstrap-osd.keyring
    [INFO ] Storing ceph.bootstrap-rgw.keyring
    [INFO ] Destroy temp directory /tmp/tmpJsXF9o

After initialization, several new keyring files appear in the cluster directory:

    [cephde@ceph205 cephcluster]$ ls -l
    1 cephde cephde    71 Mar 13 21:57 ceph.bootstrap-mds.keyring
    1 cephde cephde    71 Mar 13 21:57 ceph.bootstrap-mgr.keyring
    1 cephde cephde    71 Mar 13 21:57 ceph.bootstrap-osd.keyring
    1 cephde cephde    71 Mar 13 21:57 ceph.bootstrap-rgw.keyring
    1 cephde cephde    63 Mar 13 21:57 ceph.client.admin.keyring
    1 cephde cephde   319 Mar 13 21:51 ceph.conf
    1 cephde cephde 15527 Mar 13 21:57 ceph-deploy-ceph.log
    1 cephde cephde    73 Mar 13 21:45 ceph.mon.keyring

Check the status

    [cephde@ceph205 cephcluster]$ sudo systemctl status ceph-mon@ceph205
    monitor daemon
    systemd[1]: Started Ceph cluster monitor daemon.
    systemd[1]: Starting Ceph cluster monitor daemon...

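Distribute ceph.conf and the keys

Distribute the Ceph configuration file and keys to the other admin and storage nodes.

The distributed configuration file and keys are placed in the /etc/ceph/ directory on each node.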

    [cephde@ceph205 cephcluster]$ ceph-deploy admin ceph205
    [DEBUG ] found configuration file at: /home/ceph/.cephdeploy.conf
    [INFO ] Invoked (2.0.1): /bin/ceph-deploy admin ceph205
    [INFO ] ceph-deploy options:
    [INFO ] username : None
    [INFO ] verbose : False
    [INFO ] overwrite_conf : False
    [INFO ] quiet : False
    [INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f58e80d52d8>
    [INFO ] cluster : ceph
    [INFO ] client : ['ceph205']
    [INFO ] func : <function admin at 0x7f58e8be51b8>
    [INFO ] ceph_conf : None
    [INFO ] default_release : False
    [DEBUG ] Pushing admin keys and conf to ceph205
    [DEBUG ] connection detected need for sudo
    [DEBUG ] connected to host: ceph205
    [DEBUG ] detect platform information from remote host
    [DEBUG ] detect machine type
    [DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

Install ceph_mgr

The Luminous release requires a mgr daemon, which also hosts the dashboard.

    [cephde@ceph205 cephcluster]$ ceph-deploy mgr create ceph205:ceph205_mgr

Check the status

    [cephde@ceph205 cephcluster]$ systemctl status ceph-mgr@ceph205_mgr
    cluster manager daemon
    system/ceph-mgr@.service; enabled; vendor preset: disabled)
    2022-03-13 22:01:43 EDT; 36s ago
    13048 (ceph-mgr)
    system.slice/system-ceph\x2dmgr.slice/ceph-mgr@ceph205_mgr.service
    13048 /usr/bin/ceph-mgr -f --cluster ceph --id ceph205_mgr --setuser ceph --setgroup ...

Start mgr

The modules that mgr enables by default can be listed with (sudo) ceph mgr module ls. Enable the dashboard module:

    [cephde@ceph205 cephcluster]$ sudo ceph mgr module enable dashboard

The dashboard service is now enabled; by default it listens on TCP port 7000 on all addresses. If netstat is not available, install net-tools first.

    [cephde@ceph205 cephcluster]$ sudo netstat -tunlp | grep mgr
    tcp        0      0 192.168.1.205:6800      0.0.0.0:*               LISTEN      13048/ceph-mgr
    tcp6       0      0 :::7000                 :::*                    LISTEN      13048/ceph-mgr
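To confirm from a script that the dashboard port is open, the listening ports of a daemon can be extracted from the netstat output. This is a sketch assuming the standard `netstat -tunlp` column layout (protocol, queues, local address, foreign address, state, PID/program); `listening_ports` is a hypothetical helper and the sample lines mirror the values shown above.

```shell
# listening_ports PROGRAM
# Read `netstat -tunlp` output on stdin and print, one per line, the TCP
# ports on which PROGRAM is listening. Hypothetical helper.
listening_ports() {
    awk -v proc="$1" '
        $6 == "LISTEN" && $7 ~ ("/" proc "$") {
            # The port is whatever follows the last ":" of the local address.
            n = split($4, parts, ":")
            print parts[n]
        }' | sort -n -u
}

# Sample input in the standard netstat layout.
sample='tcp        0      0 192.168.1.205:6800      0.0.0.0:*               LISTEN      13048/ceph-mgr
tcp6       0      0 :::7000                 :::*                    LISTEN      13048/ceph-mgr'

printf '%s\n' "$sample" | listening_ports ceph-mgr
```

On the node itself the call would be `sudo netstat -tunlp | listening_ports ceph-mgr`; the same function works for `ceph-osd` or `ceph-mon`.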

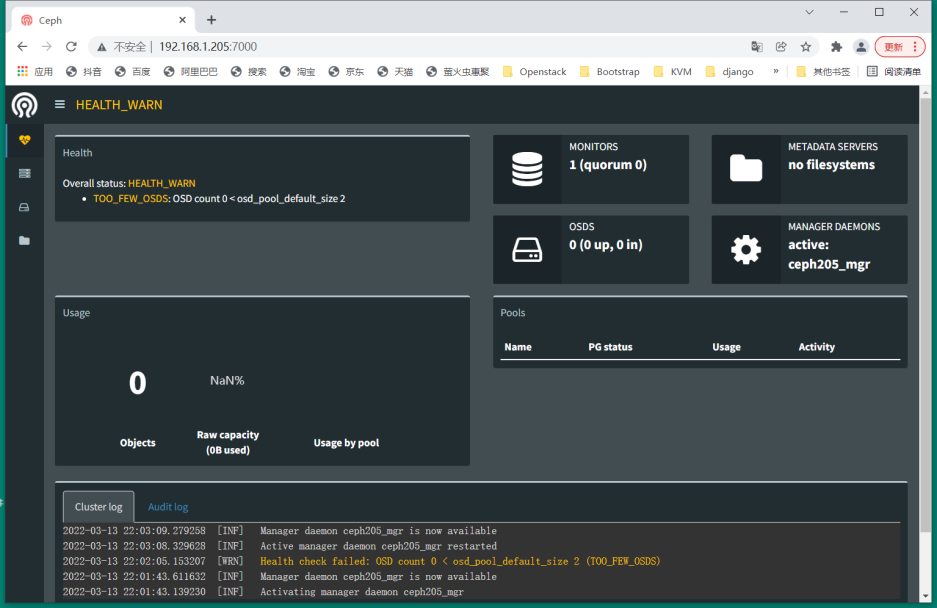

Web login: http://192.168.1.205:7000/

Check the cluster status

Check the monitor status:

    [cephde@ceph205 cephcluster]$ sudo ceph mon stat
    1 mons at {ceph205=192.168.1.205:6789/0}, election epoch 3, leader 0 ceph205, quorum 0 ceph205

Check the Ceph status with ceph health (detail), ceph -s, ceph -w, and so on. At this point the cluster reports HEALTH_WARN because no OSD has been created yet:

    [cephde@ceph205 cephcluster]$ sudo ceph -s
      cluster:
        id:     b6904020-ab52-497b-9078-c130310853bb
        health: HEALTH_WARN
                OSD count 0 < osd_pool_default_size 2

      services:
        mon: 1 daemons, quorum ceph205
        mgr: ceph205_mgr(active)
        osd: 0 osds: 0 up, 0 in

      data:
        pools:   0 pools, 0 pgs
        objects: 0 objects, 0B
        usage:   0B used, 0B / 0B avail
        pgs:
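When the status check is scripted, usually only the health keyword matters. The sketch below pulls it out of `ceph -s` text; `cluster_health` is a hypothetical helper and the sample is copied from the status output of this cluster.

```shell
# cluster_health: read `ceph -s` text on stdin and print the health keyword
# (HEALTH_OK, HEALTH_WARN or HEALTH_ERR). Hypothetical helper.
cluster_health() {
    awk '$1 == "health:" { print $2; exit }'
}

# Sample input taken from the cluster status in this walkthrough.
sample='  cluster:
    id:     b6904020-ab52-497b-9078-c130310853bb
    health: HEALTH_WARN
            OSD count 0 < osd_pool_default_size 2'

printf '%s\n' "$sample" | cluster_health
```

On a live node this would be `sudo ceph -s | cluster_health`; running `sudo ceph health` directly gives the same keyword without any parsing.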

Authentication entries can be inspected from any node:

    [cephde@ceph205 cephcluster]$ sudo ceph auth list
    installed auth entries:

    client.admin
        key: AQD/oC5i6dI/CxAAIR4LRDD5lp4zRYi7tAsMAA==
        caps: [mds] allow *
        caps: [mgr] allow *
        caps: [mon] allow *
        caps: [osd] allow *
    client.bootstrap-mds
        key: AQD/oC5iUfrENhAA+7kkfqcDNWsiIBrEmtRtnQ==
        caps: [mon] allow profile bootstrap-mds
    client.bootstrap-mgr
        key: AQAAoS5iwaskJxAA6/AHsOW5EcWhwRSCVmGCkw==
        caps: [mon] allow profile bootstrap-mgr
    client.bootstrap-osd
        key: AQABoS5iXEqJGRAAnHW4Li928UaUAreGLvdyWw==
        caps: [mon] allow profile bootstrap-osd
    client.bootstrap-rgw
        key: AQACoS5i0NZ9ERAA71OfRtQSyv9ar1ROTFEYWA==
        caps: [mon] allow profile bootstrap-rgw
    mgr.ceph205_mgr
        key: AQAGoi5iEjbiMhAACS7iZgSN35Rzu1O7ojvneg==
        caps: [mds] allow *
        caps: [mon] allow profile mgr
        caps: [osd] allow *

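Create the OSD (storage)

OSDs live on the storage nodes. Check the disk layout of the storage node first; ceph205 is used as the example. The unused 100G disk is /dev/sdb, which is used in the next step.

    [cephde@ceph205 cephcluster]$ lsblk
    RM  SIZE RO TYPE MOUNTPOINT
     0   20G  0 disk
     0    1G  0 part /boot
     0   19G  0 part
     0   17G  0 lvm  /
     0    2G  0 lvm  [SWAP]
     0  100G  0 disk
     1 1024M  0 rom

In practice, create the OSD from the admin node with ceph-deploy.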

    [cephde@ceph205 cephcluster]$ ceph-deploy osd create ceph205 --data /dev/sdb
    [ceph_deploy.conf][DEBUG ] found configuration file at: /home/ceph/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (2.0.1): /bin/ceph-deploy osd create ceph205 --data /dev/sdb
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] bluestore : None
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fec16c56320>
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] fs_type : xfs
    [ceph_deploy.cli][INFO ] block_wal : None
    [ceph_deploy.cli][INFO ] default_release : False
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] journal : None
    [ceph_deploy.cli][INFO ] subcommand : create
    [ceph_deploy.cli][INFO ] host : ceph205
    [ceph_deploy.cli][INFO ] filestore : None
    [ceph_deploy.cli][INFO ] func : <function osd at 0x7fec16c8a848>
    [ceph_deploy.cli][INFO ] ceph_conf : None
    [ceph_deploy.cli][INFO ] zap_disk : False
    [ceph_deploy.cli][INFO ] data : /dev/sdb
    [ceph_deploy.cli][INFO ] block_db : None
    [ceph_deploy.cli][INFO ] dmcrypt : False
    [ceph_deploy.cli][INFO ] overwrite_conf : False
    [ceph_deploy.cli][INFO ] dmcrypt_key_dir : /etc/ceph/dmcrypt-keys
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] debug : False
    [ceph_deploy.osd][DEBUG ] Creating OSD on cluster ceph with data device /dev/sdb
    [ceph205][DEBUG ] connection detected need for sudo
    [ceph205][DEBUG ] connected to host: ceph205
    [ceph205][DEBUG ] detect platform information from remote host
    [ceph205][DEBUG ] detect machine type
    [ceph205][DEBUG ] find the location of an executable
    [ceph_deploy.osd][INFO ] Distro info: CentOS Linux 7.5.1804 Core
    [ceph_deploy.osd][DEBUG ] Deploying osd to ceph205
    [ceph205][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [ceph205][WARNIN] osd keyring does not exist yet, creating one
    [ceph205][DEBUG ] create a keyring file
    [ceph205][DEBUG ] find the location of an executable
    [ceph205][INFO ] Running command: sudo /usr/sbin/ceph-volume --cluster ceph lvm create --bluestore --data /dev/sdb
    [ceph205][WARNIN] Running command: /bin/ceph-authtool --gen-print-key
    [ceph205][WARNIN] Running command: /bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring -i - osd new 07ec31da-a1ba-48ee-a833-37a6eba0615f
    [ceph205][WARNIN] Running command: vgcreate --force --yes ceph-5605d7c5-8a23-4e96-ba0d-6633fcdfde09 /dev/sdb
    [ceph205][WARNIN] stdout: Physical volume "/dev/sdb" successfully created.
    [ceph205][WARNIN] stdout: Volume group "ceph-5605d7c5-8a23-4e96-ba0d-6633fcdfde09" successfully created
    [ceph205][WARNIN] Running command: lvcreate --yes -l 100%FREE -n osd-block-07ec31da-a1ba-48ee-a833-37a6eba0615f ceph-5605d7c5-8a23-4e96-ba0d-6633fcdfde09
    [ceph205][WARNIN] stdout: Logical volume "osd-block-07ec31da-a1ba-48ee-a833-37a6eba0615f" created.
    [ceph205][WARNIN] Running command: /bin/ceph-authtool --gen-print-key
    [ceph205][WARNIN] Running command: mount -t tmpfs tmpfs /var/lib/ceph/osd/ceph-0
    [ceph205][WARNIN] Running command: restorecon /var/lib/ceph/osd/ceph-0
    [ceph205][WARNIN] Running command: chown -h ceph:ceph /dev/ceph-5605d7c5-8a23-4e96-ba0d-6633fcdfde09/osd-block-07ec31da-a1ba-48ee-a833-37a6eba0615f
    [ceph205][WARNIN] Running command: chown -R ceph:ceph /dev/dm-2
    [ceph205][WARNIN] Running command: ln -s /dev/ceph-5605d7c5-8a23-4e96-ba0d-6633fcdfde09/osd-block-07ec31da-a1ba-48ee-a833-37a6eba0615f /var/lib/ceph/osd/ceph-0/block
    [ceph205][WARNIN] Running command: ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/lib/ceph/osd/ceph-0/activate.monmap
    [ceph205][WARNIN] stderr: got monmap epoch 1
    [ceph205][WARNIN] Running command: ceph-authtool /var/lib/ceph/osd/ceph-0/keyring --create-keyring --name osd.0 --add-key AQBipC5i8TYVNBAAmu4r3BrEFQF+gdSB5VgM9w==
    [ceph205][WARNIN] stdout: creating /var/lib/ceph/osd/ceph-0/keyring
    [ceph205][WARNIN] added entity osd.0 auth auth(auid = 18446744073709551615 key=AQBipC5i8TYVNBAAmu4r3BrEFQF+gdSB5VgM9w== with 0 caps)
    [ceph205][WARNIN] Running command: chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/keyring
    [ceph205][WARNIN] Running command: chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/
    [ceph205][WARNIN] Running command: /bin/ceph-osd --cluster ceph --osd-objectstore bluestore --mkfs -i 0 --monmap /var/lib/ceph/osd/ceph-0/activate.monmap --keyfile - --osd-data /var/lib/ceph/osd/ceph-0/ --osd-uuid 07ec31da-a1ba-48ee-a833-37a6eba0615f --setuser ceph --setgroup ceph
    [ceph205][WARNIN] --> ceph-volume lvm prepare successful for: /dev/sdb
    [ceph205][WARNIN] Running command: chown -R ceph:ceph /var/lib/ceph/osd/ceph-0
    [ceph205][WARNIN] Running command: ceph-bluestore-tool --cluster=ceph prime-osd-dir --dev /dev/ceph-5605d7c5-8a23-4e96-ba0d-6633fcdfde09/osd-block-07ec31da-a1ba-48ee-a833-37a6eba0615f --path /var/lib/ceph/osd/ceph-0
    [ceph205][WARNIN] Running command: ln -snf /dev/ceph-5605d7c5-8a23-4e96-ba0d-6633fcdfde09/osd-block-07ec31da-a1ba-48ee-a833-37a6eba0615f /var/lib/ceph/osd/ceph-0/block
    [ceph205][WARNIN] Running command: chown -h ceph:ceph /var/lib/ceph/osd/ceph-0/block
    [ceph205][WARNIN] Running command: chown -R ceph:ceph /dev/dm-2
    [ceph205][WARNIN] Running command: chown -R ceph:ceph /var/lib/ceph/osd/ceph-0
    [ceph205][WARNIN] Running command: systemctl enable ceph-volume@lvm-0-07ec31da-a1ba-48ee-a833-37a6eba0615f
    [ceph205][WARNIN] stderr: Created symlink from /etc/systemd/system/multi-user.target.wants/ceph-volume@lvm-0-07ec31da-a1ba-48ee-a833-37a6eba0615f.service to /usr/lib/systemd/system/ceph-volume@.service.
    [ceph205][WARNIN] Running command: systemctl enable --runtime ceph-osd@0
    [ceph205][WARNIN] stderr: Created symlink from /run/systemd/system/ceph-osd.target.wants/ceph-osd@0.service to /usr/lib/systemd/system/ceph-osd@.service.
    [ceph205][WARNIN] Running command: systemctl start ceph-osd@0
    [ceph205][WARNIN] --> ceph-volume lvm activate successful for osd ID: 0
    [ceph205][WARNIN] --> ceph-volume lvm create successful for: /dev/sdb
    [ceph205][INFO ] checking OSD status...
    [ceph205][DEBUG ] find the location of an executable
    [ceph205][INFO ] Running command: sudo /bin/ceph --cluster=ceph osd stat --format=json
    [ceph_deploy.osd][DEBUG ] Host ceph205 is now ready for osd use.

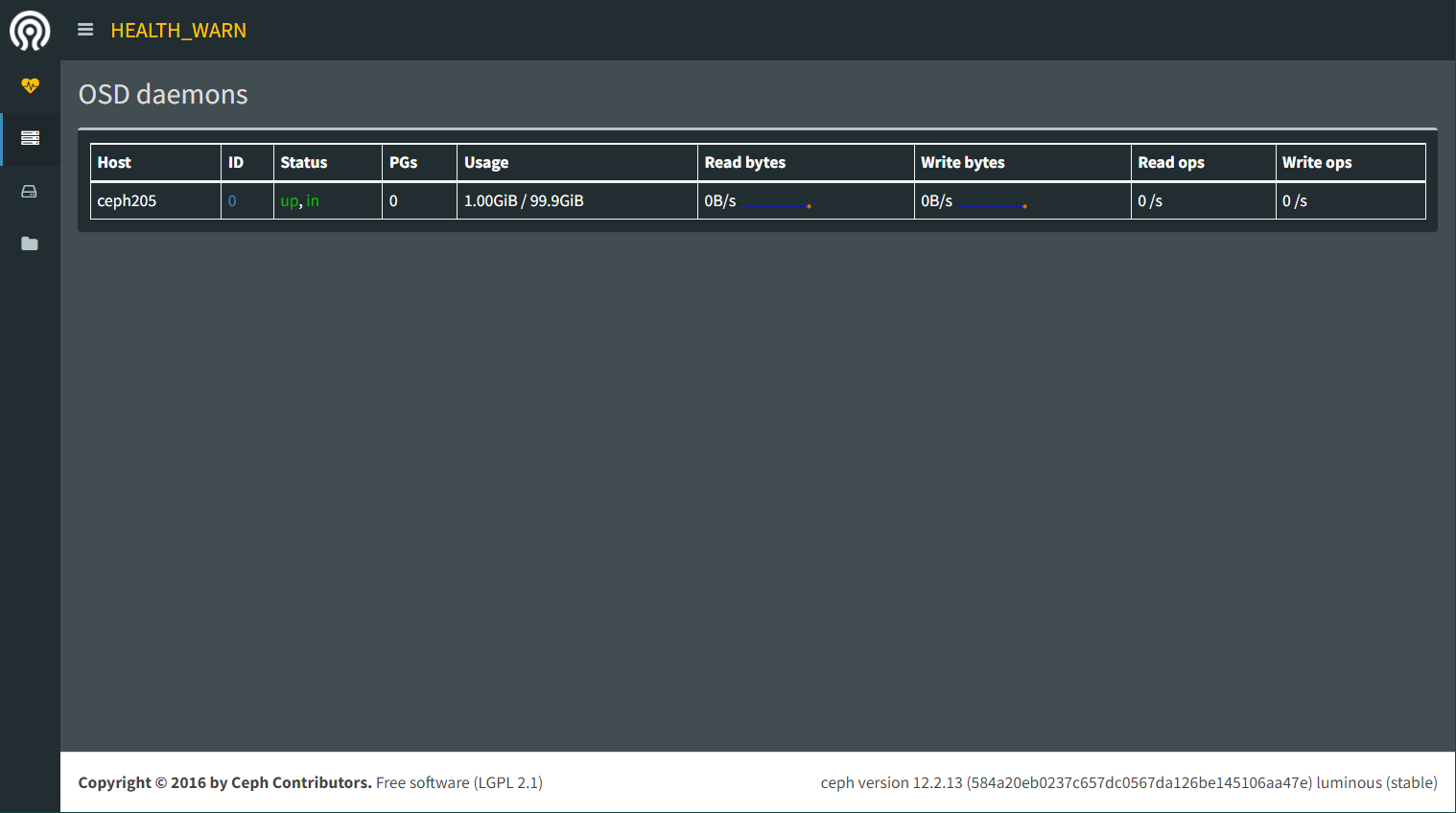

Check the OSD status

From the admin node:

    [cephde@ceph205 cephcluster]$ ceph-deploy osd list ceph205
    [DEBUG ] found configuration file at: /home/ceph/.cephdeploy.conf
    [INFO ] Invoked (2.0.1): /bin/ceph-deploy osd list ceph205
    [INFO ] ceph-deploy options:
    [INFO ] username : None
    [INFO ] verbose : False
    [INFO ] debug : False
    [INFO ] overwrite_conf : False
    [INFO ] subcommand : list
    [INFO ] quiet : False
    [INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fa82a791320>
    [INFO ] cluster : ceph
    [INFO ] host : ['ceph205']
    [INFO ] func : <function osd at 0x7fa82a7c5848>
    [INFO ] ceph_conf : None
    [INFO ] default_release : False
    [DEBUG ] connection detected need for sudo
    [DEBUG ] connected to host: ceph205
    [DEBUG ] detect platform information from remote host
    [DEBUG ] detect machine type
    [DEBUG ] find the location of an executable
    [INFO ] Distro info: CentOS Linux 7.5.1804 Core
    [DEBUG ] Listing disks on ceph205...
    [DEBUG ] find the location of an executable
    [INFO ] Running command: sudo /usr/sbin/ceph-volume lvm list
    [DEBUG ]
    [DEBUG ]
    [DEBUG ] ====== osd.0 =======
    [DEBUG ]
    [DEBUG ]   [block]    /dev/ceph-5605d7c5-8a23-4e96-ba0d-6633fcdfde09/osd-block-07ec31da-a1ba-48ee-a833-37a6eba0615f
    [DEBUG ]
    [DEBUG ]       type                      block
    [DEBUG ]       osd id                    0
    [DEBUG ]       cluster fsid              b6904020-ab52-497b-9078-c130310853bb
    [DEBUG ]       cluster name              ceph
    [DEBUG ]       osd fsid                  07ec31da-a1ba-48ee-a833-37a6eba0615f
    [DEBUG ]       encrypted                 0
    [DEBUG ]       cephx lockbox secret
    [DEBUG ]       block uuid                rP8tcN-oDS7-ALLN-S2iA-wW8F-nIcz-m73oWh
    [DEBUG ]       block device              /dev/ceph-5605d7c5-8a23-4e96-ba0d-6633fcdfde09/osd-block-07ec31da-a1ba-48ee-a833-37a6eba0615f
    [DEBUG ]       vdo                       0
    [DEBUG ]       crush device class        None
    [DEBUG ]       devices                   /dev/sdb

Check the OSD state and the CRUSH tree from the admin node:

    [cephde@ceph205 cephcluster]$ sudo ceph osd stat
    1 osds: 1 up, 1 in

    [cephde@ceph205 cephcluster]$ sudo ceph osd tree
    ID CLASS WEIGHT  TYPE NAME        STATUS REWEIGHT PRI-AFF
    -1       0.09769 root default
    -3       0.09769     host ceph205
     0   hdd 0.09769         osd.0        up  1.00000 1.00000
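The one-line `ceph osd stat` summary lends itself to a quick scripted check that every OSD is both up and in. The sketch below assumes the Luminous-style format shown above (`N osds: N up, N in`); other releases may print extra fields, and `osds_all_up_in` is a hypothetical helper.

```shell
# osds_all_up_in: read `ceph osd stat` output on stdin and succeed only if
# at least one OSD exists and the up and in counts both equal the total.
# Assumes the "N osds: N up, N in" format printed by this Luminous cluster.
osds_all_up_in() {
    awk '
        $2 == "osds:" && $4 == "up," && $6 ~ /^in/ {
            seen = 1
            ok = ($1 > 0 && $3 == $1 && $5 == $1)
        }
        END { exit !(seen && ok) }'
}

# Sample input taken from the output above.
if printf '1 osds: 1 up, 1 in\n' | osds_all_up_in; then
    echo "all OSDs are up and in"
else
    echo "some OSDs are down or out"
fi
```

On the admin node the check would be `sudo ceph osd stat | osds_all_up_in`, for instance as a gate before creating pools.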

Check capacity and usage from the admin node:

    [cephde@ceph205 cephcluster]$ sudo ceph df
    GLOBAL:
        SIZE        AVAIL        RAW USED     %RAW USED
        100 GiB     99.0 GiB     1.00 GiB     1.00
    POOLS:
        NAME     ID     USED     %USED     MAX AVAIL     OBJECTS

On the OSD node, the 100G disk now carries a 100G LVM volume:

    [cephde@ceph205 cephcluster]$ lsblk
    RM  SIZE RO TYPE MOUNTPOINT
     0   20G  0 disk
     0    1G  0 part /boot
     0   19G  0 part
     0   17G  0 lvm  /
     0    2G  0 lvm  [SWAP]
     0  100G  0 disk
     0  100G  0 lvm
     1 1024M  0 rom

Each ceph-osd process has its own index, assigned in the order the OSDs were created:

    [cephde@ceph205 cephcluster]$ systemctl status ceph-osd@0
    system/ceph-osd@.service; enabled-runtime; vendor preset: disabled)
    2022-03-13 22:11:51 EDT; 3min 30s ago
    23971 ExecStartPre=/usr/lib/ceph/ceph-osd-prestart.sh --cluster ${CLUSTER} --id %i (code=exited, status=0/SUCCESS)
    23976 (ceph-osd)
    system.slice/system-ceph\x2dosd.slice/ceph-osd@0.service
    23976 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph

Ports used by the OSD process (alternatively: ps aux | grep osd | grep -v grep):

    [cephde@ceph205 cephcluster]$ sudo netstat -tunlp | grep osd
    tcp        0      0 192.168.1.205:6801      0.0.0.0:*               LISTEN      23976/ceph-osd
    tcp        0      0 192.168.1.205:6802      0.0.0.0:*               LISTEN      23976/ceph-osd
    tcp        0      0 192.168.1.205:6803      0.0.0.0:*               LISTEN      23976/ceph-osd
    tcp        0      0 192.168.1.205:6804      0.0.0.0:*               LISTEN      23976/ceph-osd

Or log in to the mgr dashboard: http://192.168.1.205:7000